We were thrilled to welcome Sue Holloway, Chief Executive of Project Oracle, to speak about her experience of developing evidence-informed practice as part of our launch event for Learning about Culture, a major new RSA and EEF programme to support evidence-based cultural learning. Here are the insights that she shared with us on developing and using evidence to improve outcomes for children and young people.

By way of background, Project Oracle is a children and youth evidence hub. This means that we are interested in helping organisations delivering interventions to children and young people to think about, develop and improve the evidence that shows their interventions are making a difference. We started out based in London, funded by the Greater London Authority (GLA), The Mayor’s Office for Policing and Crime (MOPAC) and the Economic and Social Research Council (ESRC), and last year we became a national charity, funded mainly by grant-making trusts and foundations.

The Project Oracle Standards of Evidence

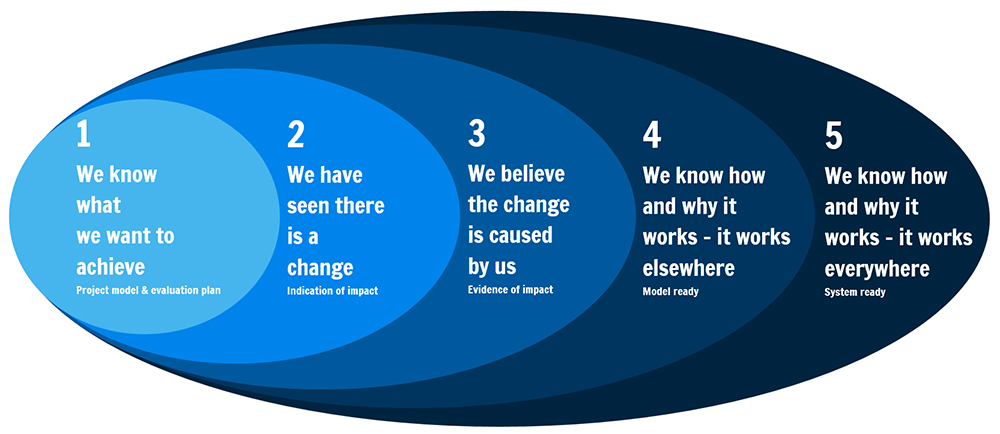

The Project Oracle standards of evidence were developed by the Social Research Unit at Dartington, which has a long and distinguished track record in this type of work. As with most sets of standards you will find, they are loosely based on the Maryland Scientific Methods Scale (SMS). This was developed in the 1990s to rank policy evaluations by looking at both how rigorous the evaluation methodology was and, crucially, how well it was carried out. Like the Maryland Scale, the Project Oracle Standards have five levels, but ours are based around a set of statements rather than a particular set of evaluation methods, as shown below.

Let’s explore the first three in detail:

Standard 1 – we know what we want to achieve – the organisation has a good theory of change which makes a logical connection between the activities they are delivering and the change they want to see, and they have a credible plan for how they are going to collect evidence.

Standard 2 – we have seen there is a change – the organisation has before and after data showing that a change has occurred. At this stage, however, we don't actually know whether the change is because of what they are doing or because of something else entirely going on in the lives of the young people they are working with.

Standard 3 – we believe the change is caused by us – the organisation has evidence that the change would not have occurred without their intervention.

Validation against our standards is something that any youth charity can do and it involves assessing the quality of the evidence supporting these statements. You can hear that each successive statement gives more confidence that the intervention is actually achieving the desired change, and I think it’s been really helpful for the children and youth sector in London, where we started out, to have independent validation of the quality and therefore reliability of the evidence at every stage.

The danger is that this can be seen as a ladder to climb, where the lower rungs have very little value, whereas the reality is that, going back to the Maryland Scale, the quality as well as the nature of the evidence matters a lot. And frankly a lot of high quality before and after evidence at Standard 2, showing that a change is occurring year on year with different groups of young people, is more convincing than a badly matched control group at Standard 3.

Therefore, at Project Oracle, our validations aren’t like marking an exam – you submit your papers and get a pass or fail – it’s about helping organisations to improve the quality of their evidence. We do this by offering training and 1:1 support, as well as bringing organisations together in cohorts to facilitate peer learning and support for this journey.

Building a sectoral approach to evaluation

It has been great to hear (as part of the Learning about Culture launch event) that the cultural learning sector emphasises the use of evaluation for learning, because it is so important to recognise that it’s more than ticking boxes for funders - it’s vital for organisations to understand whether their intervention is making the change they think it is, and how it can be improved to achieve even more. But involving funders in the conversations about what proportionate and feasible evaluation looks like is also crucial if delivery organisations are not to be faced with a raft of differing requirements about the data they should collect for different funders, as is all too often the case.

At Project Oracle we think it’s really important to involve the whole evidence community in conversations about evidence and evaluation: the organisations delivering the interventions and collecting the data, the funders wanting to know that their investment is worthwhile, and the academics and experts who can help with finding new but robust ways to evidence change. This is much easier to do when working with groups of organisations delivering interventions in a similar context and expecting to see change in similar dimensions. So we also work with cohorts in particular areas, and facilitate conversations with funders about what is reasonable and proportionate. At this very moment we are launching a second Arts cohort focusing on arts education, and we are currently inviting arts organisations that work with young people to apply!

Sectoral approaches to evaluation are not about developing a one-size-fits-all straitjacket that only adds to the demands on practitioners and leaves everyone feeling dissatisfied. They are about getting delivery organisations, in the first instance, to think about what data they can collect and how they can collect it efficiently (including learning from their peers about what different organisations have found effective in different contexts), as well as thinking about whether there are tools that everyone can use. Coming together and agreeing on approaches puts delivery organisations in a better position to talk to funders and commissioners about what is feasible and realistic. Ultimately we all want the same thing: to improve outcomes for the beneficiaries. Working together and sharing the learning that comes through the evaluation process is hopefully a good way of doing this.

And remember, no-one is starting from scratch: there are many organisations, including ourselves, that have resources that can help – the Arts Council, Bridge Organisations, EEF and New Philanthropy Capital, to name a few. There is no need to reinvent the wheel, but by working together we can find new ways to build on existing good practice.

Top tips for the cultural learning sector

As the cultural learning sector looks to build a cross-sector approach to research and evaluation, here are my top tips:

1. Look at what different organisations are doing and get the foundations solid – good monitoring is an often neglected bedrock, and it can get you a long way, particularly if you are delivering the kind of intervention that involves limited interaction with a large number of people. This is a journey, not everyone has the same destination, and that's OK. The key is to gather high quality evidence at whatever level.

2. Be willing to share – we have so much to learn from each other and if you share with others they will share with you, but that has to be about what hasn’t worked as well as about what’s been successful. Unless there’s a real culture of learning, people won’t be willing to share, but you can’t build a sectoral approach without it.

3. Take this seriously and resource it adequately – it takes time and effort to develop good evaluation approaches, and even more time and effort to build consensus around evidence across a sector. This is particularly the case as you move from what seems sensible to a small number of organisations to how you might roll approaches out more widely. Shared measurement is a great prize, but it is hard won and involves a lot of negotiation and compromise, as many organisations are already invested in doing things in a certain way. It's important to keep as many people involved as possible, but you also need a strong lead to ensure that progress can be made. None of this comes cheaply, and there has to be some funding to create the space for these conversations to take place.

We would recommend that you start where you are, build solid foundations, be willing to share your learnings with others and resource this work properly.

About the author: Sue is Chief Executive of Project Oracle. Before joining Project Oracle Sue was the Director of Pro Bono Economics for 5 years. She has been a professional economist since 1997 when she joined the Government Economic Service (GES). She worked at the Office for National Statistics, managed the GES, and was Deputy Chief Economist at the Department for International Development. She is also a qualified management accountant, and in the past has worked overseas for TEAR Fund and been a trustee at Traidcraft. Sue holds a BSc in Economics from Royal Holloway, University of London.

Find out more about the Learning about Culture Programme.
