Navigating the new era of generative AI may be the most critical challenge to democracy yet.

We are on the cusp of a new stage in human evolution which will have a profound effect on society and democracy. I call it the ‘era of generative AI’, an epoch in which our relationship with machines will change the very framework of society. Navigating this period of immense change, with both the opportunities and risks that it engenders, will be one of the biggest challenges for both democracy and society in this century.

For the past decade, I have been researching how the development of a new type of AI, so-called ‘generative AI’, will impact humanity. The clue as to why this type of AI is so extraordinary is in its name: an emerging field of machine learning that allows machines to ‘generate’ or create new data or things that did not exist before.

The medium of this new data is any digital format. AI can create everything from synthetic audio to images, text and video. In its application, generative AI can be conceived of as a turbo engine for all information and knowledge. AI will increasingly be used not only to create all digital content, but as an automation layer to drive forward the production of all human intelligent and creative activity.

Picture a creative partner capable of writing riveting stories, composing enchanting music or designing breathtaking visual art. Now imagine this partner as an AI model – a tool that learns from the vast repository of digitised human knowledge, constantly refining its abilities in order to bring our most ambitious dreams to life.

This is generative AI: a digital virtuoso that captures the nuances of human intelligence and applies this to create something new and awe-inspiring – or new and terrifying. Through tapping into the power of deep learning techniques and neural networks, generative AI transcends traditional programming, effectively enabling machines to think, learn and adapt like never before.

This AI revolution is already becoming a fundamental feature of the digital ecosystem, seamlessly deployed into the physical and digital infrastructure of the internet, social media and smartphones. But while generative AI has been in the realm of the possible for less than a decade, it was only last November that it hit the mainstream. The release of ChatGPT – an application built on a large ‘language model’ (an AI system that can interpret and generate text) – was an inflection point.

ChatGPT is now the most popular application of all time. It hit 100 million users within two months and currently averages over 100 million users per month. Almost everyone has a ChatGPT story, from the students using it to write their essays to the doctor using it to summarise patient notes. While there is huge excitement around generative AI, it is simultaneously raising critical concerns around information integrity and brings into question our collective capacity to adapt to the pace of change.

From deepfakes to generative AI

While ‘generative AI’ was only really coined as a term in 2022, my deep dive into this world started in 2017 when I was advising global leaders, including former NATO secretary-general Anders Fogh Rasmussen and (then former vice-president) Joe Biden. The digital ecosystem that we have built over the past 30 years (underpinned by the internet, social media and smartphones) has become an essential ecosystem for business, communication, geopolitics and daily life. While the utopian dream of the Information Age has delivered, its darker underbelly was becoming increasingly evident, and I had spent the better half of a decade examining how the information ecosystem was being weaponised.

This ecosystem has empowered bad actors to engage in crime and political operations far more effectively and with impunity. Cybercrime, for example, is predicted to cost the world $8tn (£6tn) in 2023. If it were measured as a country, cybercrime would be the world’s third-largest economy after the US and China.

But it is not only malicious actors that cause harm in this ecosystem. The sheer volume of information we are dealing with, and our inability to interpret it, also has a dangerous effect. This is a phenomenon known as ‘censorship through noise’; it occurs when there is so much ‘stuff’ that we cannot distinguish or determine which messages we should be listening to.

All this was on my mind when I encountered AI-generated content for the first time in 2017. As the possibility of using AI to create novel data became increasingly viable, enthusiasts started to use this technology to create ‘deepfakes’. A deepfake has come to mean an AI-generated piece of content that simulates someone saying or doing something they never did. Although fake, it looks and sounds authentic.

The ability for AI to clone people’s identity – but more importantly, to generate synthetic content across all forms of digital medium (video, audio, text, images) – is a revolutionary development. This is not merely about AI being used to make fake content – the implications are far more profound. In this new paradigm, AI will be used to power the production of all information.

Above: A still taken from a deepfake video circulated in March 2022 that appeared to show Ukrainian President Volodymyr Zelensky urging the surrender of his forces

Information integrity and existential risk

In my 2020 book, Deepfakes: The Coming Infocalypse, I argued that the advent of AI-generated content would pose serious and existential risks, not only to individuals and businesses, but to democracy itself. Indeed, in the three years since my book was published, we have begun to encounter swathes of AI-generated content ‘in the wild’.

One year ago, at the start of the Russian invasion of Ukraine, a deepfake video of Ukrainian President Volodymyr Zelensky, urging his army to surrender, emerged on social media. If this message had been released at a vitally important moment of the Ukrainian resistance, it could have been devastating. While the video was quickly debunked, this example of weaponised synthetic content is a harbinger of things to come.

Deepfake identity scams – such as one in which crypto-scammers impersonated Tesla CEO Elon Musk – made more than $1.7m (£1.4m) in six months in 2021, according to the US Federal Trade Commission. Meanwhile, a new type of fraud (dubbed ‘phantom fraud’), in which scammers use deepfake identities to accrue debt and launder money, has already resulted in losses of roughly $3.4bn (£2.7bn).

Cumulatively, the proliferation of AI-generated content has a profound effect on digital trust. We were already struggling with the health of our information ecosystem before AI came into the equation. But what does it mean for democracy, and society, if everything we consume online – the main diet feeding our brains – can be generated by artificial intelligence? How will we know what to trust? How will we differentiate between authentic and synthetic content?

Safeguarding the integrity of the information ecosystem is a fundamental priority not only for democracy, but for society as a whole. Not only can everything be ‘faked’ by AI, but the fact that AI can now ‘synthesise’ any digital content also means that authentic content (for example, a video documenting a human rights abuse or a politician accepting a bribe) can be decried as ‘synthetic’ or ‘AI-generated’ – a phenomenon known as ‘the liar’s dividend’.

The core risk to democracy is a future in which AI is used as an engine to power all information and knowledge – consequently degrading trust in the medium of digital information itself.

But democracy (and society) cannot function if we cannot find a medium of information and communication that we can all agree to trust. It is therefore vital that we get serious about information integrity as AI becomes a core part of our information ecosystem.

Solutions: authentication of information

There are both technical and ‘societal’ ways in which to do this. One of the most promising approaches, in my view, is content authentication. Rather than trying to ‘detect’ everything that is made by AI (which will be futile if AI drives all information creation in the future, anyway), we embed the architecture of ‘authentication’ into the framework of the internet itself. Content should be created with a cryptographic marker so its origin and mode of creation (whether it was made by AI or not) can always be verified. This kind of cryptography is embedded in the ‘DNA’ of the content, so it is not just a watermark – it is baked in and cannot be removed or faked.

But simply ‘signing’ content in this way is not enough. We also must adopt an open standard to allow that ‘DNA’ or mark of authentication to be seen whenever we engage with content across the internet – whether via email, YouTube or social media. This open standard for media authentication is already being developed by the Coalition for Content Provenance and Authenticity (C2PA), a non-profit organisation that counts the BBC, Microsoft, Adobe and Intel among its members.

Ultimately, this approach is about radical transparency in information. Rather than adjudicating the truth (a fool’s errand), it is about allowing everyone to make their own trust decisions based on context. Just as I want to see a label that tells me what goes into the food I eat, society needs to have the digital infrastructure in place that allows individuals to determine how to judge or trust the online information that fuels almost every single decision we make.
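To make the idea of signing and verifying content concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the C2PA specification or any real library's API: the function names, the manifest fields and the demo key are all hypothetical, and a shared-secret HMAC from the standard library stands in for the public-key signatures and certificates a real provenance system would likely use. The sketch binds a claim about origin and mode of creation to a hash of the content, so that altering either the content or the claim makes verification fail.

```python
import hashlib
import hmac
import json

# Assumption: a demo shared secret. A real provenance system would more
# likely use a private signing key with a public certificate, not a shared key.
SIGNING_KEY = b"demo-key-not-for-real-use"


def make_manifest(content: bytes, creator: str, made_by_ai: bool) -> dict:
    """Build a signed provenance record (hypothetical format) for some content."""
    claims = {
        "creator": creator,
        "made_by_ai": made_by_ai,
        # The hash ties the claims to these exact bytes.
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "signature": signature}


def verify(content: bytes, manifest: dict) -> bool:
    """Return True only if neither the claims nor the content were altered."""
    payload = json.dumps(manifest["claims"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    content_hash = hashlib.sha256(content).hexdigest()
    content_ok = manifest["claims"]["content_sha256"] == content_hash
    return signature_ok and content_ok


# Usage: sign a piece of content, then check the original and an altered copy.
video = b"original footage"
manifest = make_manifest(video, creator="Example Newsroom", made_by_ai=False)
original_is_valid = verify(video, manifest)
altered_is_valid = verify(b"tampered footage", manifest)
```

In this sketch the verifier does not decide whether the content is true. It only confirms that the stated origin and mode of creation still match the bytes in hand, which is the kind of context a reader would need to make their own trust decision.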

Building societal resilience

But the problem cannot be solved by technology alone. We can build the tools for signing content, and the open standard to verify information across the internet, but the bigger challenge is understanding that we stand on the precipice of a very different world – one in which exponential technologies are going to change the very framework of society.

This means that old ways of thinking need to be updated. Our analogue systems are no longer fit for purpose. We must reconceptualise what it means to be citizens of a vibrant democracy, and understanding is the first step. Ultimately, this is not a story about technology – this is a story about humanity.

While the recent advances in AI have kicked off much discussion about the advent of ‘artificial general intelligence’ (AGI, ie the point at which machines take over as they become smarter than humans), we are not there – yet. We still have the agency to decide how AI is integrated into our society, and that is our responsibility. As a democracy, this challenge is one of the most important of our time – we must not squander our chance to get it right.

Nina Schick is an author, entrepreneur and advisor specialising in generative AI. Previously, Nina worked advising leaders on geopolitical crises including Brexit, the Russia–Ukraine war and state-sponsored disinformation.

This article first appeared in RSA Journal Issue 2 2023.

Read more Journal and Comment articles

-

Worlds apart

Comment

Frank Gaffikin

We are at an inflexion point as a species with an increasing need for collaborative responses to the global crises we face.

-

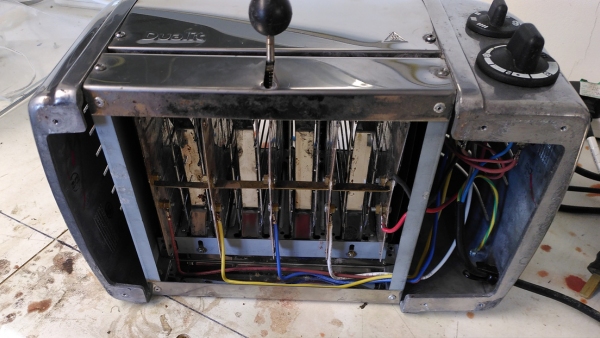

Why aren't consumer durables durable?

Comment

Moray MacPhail

A tale of two toasters demonstrates the trade-offs that need to be considered when we're thinking about the long-term costs of how and what we consume.

-

You talked, we listened

Comment

Mike Thatcher

The RSA responds to feedback on the Journal from over 2,000 Fellows who completed a recent reader survey.

Be the first to write a comment

Comments

Please login to post a comment or reply

Don't have an account? Click here to register.